Sitemaps: Setup, Monitoring & Metrics for Analysis

By Martijn Scheijbeler. Published March 16, 2018.

In my effort to write longer posts on a specific topic, I thought it was time to shed some light on something that we’ve been working on during the last months at Postmates and something that I never thought could become an interesting topic: sitemaps. They’re pretty boring in themselves: it’s a technology where you basically give search engines all the URLs for a site that you want them to know about (and index), and you take it from there. Even more so as most sites these days run on a CMS like WordPress, where tons of plugins can take care of this for you. Don’t get me wrong, do use them if you are on one! But as I mainly work for companies that don’t have a ‘standard’ CMS, I have worked multiple times on creating sitemaps and making their integrations work flawlessly. Over time that taught me a ton of things, and recently we discovered that certain additional features can help speed up the process. That’s why I think it was time to write a detailed essay on sitemaps ;). (*barf: definitive guide).

TL;DR: How can sitemaps help you get better insights, and how do you set them up?

- Sitemaps will provide you with insights on what pages are submitted and which ones are indexed.

- You create sitemap files by uploading XML or TXT files with dumps of URLs.

- All your different content on pages can be added to sitemaps: images, video, news.

- Different fields for priority, last modified and frequency can give search engines insight into the priority with which certain URLs should be crawled.

- Create multiple sitemaps with segments of pages, for example by product category.

- Add your sitemap index file to your robots.txt so it’s easy to find for a search engine.

- Submit your sitemap and ping sitemap files to search engines for quick discovery.

- Make sure all URLs in your sitemaps are working and returning a 200 status code. Think twice: do you want all of them to be discovered?

- Monitor your data and crawls through log files and Google Search Console.

Goals

When you start working on sitemaps there are a few things to keep in mind: the ideas that you have around them and the goal. What problem of yours are they solving? For small sitemaps (100 pages) I’m honestly not sure if I would support sitemaps. There are probably a lot of other projects that would have more impact on SEO/the business.

If you’re thinking about setting up sitemaps, there are a few goals that they will help you accomplish:

- Get better insights into what pages are valuable to your site.

- Provide search engines with the URLs that you want them to index, the fastest way to submit pages at scale.

Overall this means that you want to support the best sitemap infrastructure you can, as that will help you get the best insights in the quickest way and, most of all, get your pages submitted and indexed as fast as possible.

Setup

Sitemap

Format: XML or text? Does the format matter? For most companies probably not, as they’re using a plugin to support their sitemaps. If you want to go more advanced and get better insights, I would go with the XML format myself. From time to time we use text file sitemaps where we just dump all the URLs. They’ll get you a quick and dirty sitemap if you’re short on time or resources.

Types: There are multiple formats for sitemaps to support different content types.

- Pages: In there you’ll dump all the actual URLs that you have on the site and that you want a search engine to know about. You can add images for these specific pages to that sitemap as well to ensure that the search engine understands which images are an important part of the page.

- Images: You can add sitemaps for images, both for image search and to strengthen the pages they belong to.

- Videos: Video sitemaps used to have a bigger impact back in the day, as video listings were a more prominent part of the search results page. These days you mostly want to let search engines know about videos because they’re usually part of an individual page.

- News: News is not really its own format as articles are just individual pages. But Google News sitemaps do have their own format, see Creating a News Sitemap – Google.

- HREFLang: This is not really a type of content but it’s still important to think about. If your pages have a translated version, you want to make sure it’s listed as the alternate (‘duplicate’) version of the original. Read more about that in Google’s support documentation.

Fields

- Frequency: Does the page change on a regular basis? Some pages are dynamic and will always change, while others change only daily, weekly or monthly. It’s likely worth including this as a signal in combination with the Last Modified field and the header.

- Last Modified: We do want to let a search engine know which pages have been updated/modified and which ones haven’t. That’s why I’d always recommend that organizations include this in their sitemap. In combination with the Last Modified header (we’ll talk about that in the next step) it will be a good enough signal to assess if the page has been modified or not.

- Priority: This is a field that I wouldn’t spend too much time thinking about. On multiple occasions, Google has mentioned that they don’t put any value or effort into understanding this field. Some plugins use it and it won’t hurt. But for custom setups it’s not something that I would recommend adding.
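To make the fields above concrete, here is a minimal sketch of generating an XML sitemap with Python's standard library. The function name `build_sitemap` and the shape of the input dictionaries are my own assumptions for illustration; only the element names (`urlset`, `url`, `loc`, `lastmod`, `changefreq`) and the namespace come from the sitemaps.org protocol. Priority is left out on purpose, in line with the advice above.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML (as bytes) from dicts with 'loc' and optional fields.

    The input shape is a hypothetical one chosen for this sketch.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        # Only emit the optional fields that are actually set for this page.
        for field in ("lastmod", "changefreq"):
            if entry.get(field):
                ET.SubElement(url, field).text = entry[field]
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    pages = [
        {"loc": "https://www.example.com/", "lastmod": "2018-03-16", "changefreq": "daily"},
        {"loc": "https://www.example.com/about"},
    ]
    print(build_sitemap(pages).decode("utf-8"))
```

A text sitemap needs none of this: it is just one URL per line, which is why it works as the quick and dirty option.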

Last Modified

Has the actual sitemap changed since the last time it’s been generated? Yes or No? In some cases your sitemap won’t change. You didn’t add any new products/articles. Have you ever run this in your terminal:

curl -I https://www.example.com/sitemap/sitemap_index.xml

Look at the headers: if you see a Last-Modified header, it signals when the file was last modified. We use it to tell the last time the sitemap was updated. We combine this with serving a Last-Modified header on the URLs that are in the sitemaps. This won’t always work, as pages can change from moment to moment (based on availability of products, for example).
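If you want to act on that header in a script, a rough sketch could look like this. It only covers the comparison step: the function name `modified_since` is hypothetical, and fetching the header value (for example from the `curl -I` output above) is left out.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def modified_since(last_modified_header, last_seen):
    """Return True if the Last-Modified value is newer than last_seen.

    last_seen must be a timezone-aware datetime. A missing header is treated
    as "changed", which is an assumption of this sketch: without the header
    we simply cannot tell.
    """
    if not last_modified_header:
        return True
    modified_at = parsedate_to_datetime(last_modified_header)
    return modified_at > last_seen


if __name__ == "__main__":
    last_crawl = datetime(2018, 3, 16, 9, 0, tzinfo=timezone.utc)
    print(modified_since("Fri, 16 Mar 2018 10:00:00 GMT", last_crawl))
```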

Segmenting Pages

For better insights it’s really useful to segment your sitemaps. The limit per sitemap is 50,000 URLs, but there is no required minimum. You’ll see sitemaps being segmented in multiple ways. Based on these segments you can get more detailed insights: is one category of pages better indexed than another one?

Categories: Most companies that I work with are segmenting their pages by the categories they’ve defined themselves. This could be based on region or, for example, on product categories for an ecommerce site.

Static Pages: Something that most people with custom-built sites don’t realize is that there is usually still a ton of pages that aren’t backed by a database that you want insights on too. Think about: contact, homepage, about us, services, etc. List all these pages in a different sitemap (static_sitemap.xml) and include this file in your sitemap index too.

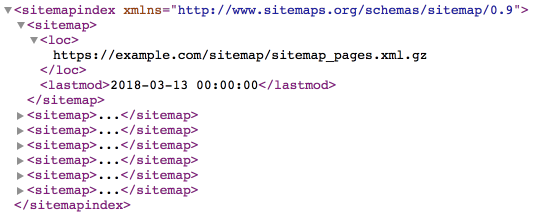
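As a sketch of how segmenting could work in a custom setup: group your URLs by category and split any category that goes over the 50,000 URL limit into numbered files. The function name and the `{category}_sitemap_{n}.xml` naming scheme are assumptions for illustration, not how we name ours.

```python
from collections import defaultdict

MAX_URLS_PER_SITEMAP = 50_000  # limit per sitemap file


def segment_urls(pages, max_urls=MAX_URLS_PER_SITEMAP):
    """Group (category, url) pairs into sitemap files of at most max_urls URLs.

    Returns a dict mapping a (hypothetical) file name to its list of URLs.
    """
    by_category = defaultdict(list)
    for category, url in pages:
        by_category[category].append(url)

    files = {}
    for category, urls in sorted(by_category.items()):
        # Split large categories into numbered chunks.
        for part, start in enumerate(range(0, len(urls), max_urls), start=1):
            files[f"{category}_sitemap_{part}.xml"] = urls[start:start + max_urls]
    return files


if __name__ == "__main__":
    pages = [
        ("static", "https://www.example.com/contact"),
        ("products", "https://www.example.com/p/1"),
        ("products", "https://www.example.com/p/2"),
    ]
    for name, urls in segment_urls(pages).items():
        print(name, len(urls))
```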

Sitemap Index

If you have multiple sitemaps (10-25+) you want to look into creating a sitemap index file. With this you can submit just one file, and the search engine will be able to find all the underlying sitemap files through it. This saves you from adding multiple sitemap URLs to Google Search Console/Bing Webmaster Tools and means you only have to add one line to your robots.txt file. In the end it’s technically another sitemap, one which lists the URLs of all the other sitemaps.
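A sitemap index can be generated the same way as a regular sitemap. The sketch below assumes you already have the public URLs of the individual sitemap files; `build_sitemap_index` is a hypothetical helper name, while the `sitemapindex`, `sitemap`, `loc` and `lastmod` elements come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(sitemap_urls, lastmod=None):
    """Build a sitemap index (as bytes) that lists the given sitemap files."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for sitemap_url in sitemap_urls:
        sitemap = ET.SubElement(index, "sitemap")
        ET.SubElement(sitemap, "loc").text = sitemap_url
        if lastmod:
            # One shared lastmod for all files keeps the sketch short.
            ET.SubElement(sitemap, "lastmod").text = lastmod
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    urls = [
        "https://www.example.com/sitemap/static_sitemap.xml",
        "https://www.example.com/sitemap/products_sitemap_1.xml",
    ]
    print(build_sitemap_index(urls, lastmod="2018-03-16").decode("utf-8"))
```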

Robots.txt

You want to make sure that a search engine knows about your sitemaps on its first visit. Usually one of the first files a search engine’s crawler will look at is the robots.txt file, as it needs to know what it can/can’t look at on a site. As we just talked about the sitemap index, we’re going to list that one in the robots.txt file for your site, which should live at https://www.domain.com/robots.txt. It’s as simple as adding this one line to it:

Sitemap: https://www.domain.com/sitemap/sitemap_index.xml

Obviously the URL can be different based on where you have hosted your sitemap index file.
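To check that the line is picked up the way you expect, you could parse your robots.txt with Python's built-in `urllib.robotparser` (its `site_maps()` method needs Python 3.8 or newer). The helper name `sitemaps_from_robots` is made up for this sketch, and it works on the file contents as a string so it doesn't need network access.

```python
from urllib.robotparser import RobotFileParser


def sitemaps_from_robots(robots_txt):
    """Return the Sitemap URLs declared in the given robots.txt contents."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


if __name__ == "__main__":
    robots = (
        "User-agent: *\n"
        "Disallow: /admin/\n"
        "Sitemap: https://www.domain.com/sitemap/sitemap_index.xml\n"
    )
    print(sitemaps_from_robots(robots))
```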

GZIP

If you’re a big site you likely have servers that won’t go down and can take quite a hit. But extensive sitemap files can easily get up to 50MB, and that is not a file transfer that can be done in a matter of two seconds. It can also slow things down on both your end and the search engine’s end. That’s why we’ve started gzipping our sitemap files to make for a faster download and speed up that process. At the same time you make it one step more complicated for people to copy-paste your data.
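Gzipping is a one-liner with Python's standard library. This is only a sketch of the compression step, with a hypothetical helper name; writing the result to a `.xml.gz` file and serving it is up to your own setup.

```python
import gzip


def gzip_sitemap(xml_bytes):
    """Compress sitemap XML (bytes) with gzip and return the compressed bytes."""
    return gzip.compress(xml_bytes)


if __name__ == "__main__":
    # A repetitive dummy sitemap body, just to show the size difference.
    body = b"<url><loc>https://www.example.com/page</loc></url>" * 1000
    xml = b"<urlset>" + body + b"</urlset>"
    print(len(xml), "bytes before,", len(gzip_sitemap(xml)), "bytes after")
```

Because sitemaps are very repetitive (the same tags and URL prefixes over and over), they tend to compress well.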

PING Search Engines

Guess what, it has an effect. I thought it was crazy too, but we found a tiny bit of proof that pinging a search engine actually results in something. As you will most likely only care about Google and Bing, here is how to let them know about a sitemap:

- https://google.com/ping?sitemap={URL to sitemap}

- https://bing.com/ping?sitemap={URL to sitemap}
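A small sketch of building these ping URLs in a script. The two endpoints are the ones listed above; whether a search engine still accepts them today is something to verify yourself. The function name is hypothetical, and the sketch only builds the URLs, so firing the actual GET request is left to you.

```python
from urllib.parse import quote

# Endpoints as listed above; their current availability is an assumption.
PING_ENDPOINTS = {
    "google": "https://google.com/ping?sitemap=",
    "bing": "https://bing.com/ping?sitemap=",
}


def ping_urls(sitemap_url):
    """Return the ping URL per search engine for the given sitemap URL."""
    # The sitemap URL is a query parameter value, so percent-encode all of it.
    encoded = quote(sitemap_url, safe="")
    return {engine: endpoint + encoded for engine, endpoint in PING_ENDPOINTS.items()}


if __name__ == "__main__":
    for engine, url in ping_urls("https://www.example.com/sitemap/sitemap_index.xml").items():
        print(engine, url)
```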

Submit your sitemap

Probably not worth explaining, you need to make sure that you can get insights into your XML sitemaps and the URLs that are listed in there. So make sure to submit your sitemaps to Google Search Console and Bing Webmaster Tools.

Pubsubhubbub

One little-known project is PubSubHubbub. It lets subscribers, mostly in the publishing world, be instantly notified (through a specific push protocol) when new URLs are published in a feed. This protocol works through an Atom feed (do you still know about that format?) that you provide. Once you have registered the feed with the right services, it becomes easier for them to be notified of new pages.

XSLT

XML sitemaps aren’t easy to read for a regular person. If you’re not familiar with the XML format it might be uncomfortable. Luckily, a while back people invented XSLT. This lets you ‘style’ the output of XML files into something that is more readable, which makes it easier to see certain elements in the sitemaps that you’ve listed. If you want to make them more readable I would advise looking into: https://www.w3schools.com/xml/xsl_intro.asp.

Quality Signals

Search engines like sites that are of high quality. The pages are the best, the URLs are always working and your site never goes down. Chances are high that all of this doesn’t always apply to your sitemaps as some pages might not be great. Some things to consider when you’re working on this:

- 301/302/404: Are all URLs in your sitemap responding like they should, with a 200 response? In the best-case scenario none of your URLs should be responding with any other response code than that. In reality most sitemaps always contain some errors.

- NoIndex: Have you included URLs in your sitemap that are actually excluded by a noindex meta tag or header? Make sure that it’s not the case.

- Robots.txt: An even bigger problem, are you telling the search engine about URLs that you actually don’t want them to look at?

- Canonical Pages: Is the actual URL that you’re listing the canonical URL/original URL, or are you listing pages that are still ‘stealing’ the content from another page, like a filter page? Do you really want to list these URLs in your sitemap?

Some of these signals might have a big or small impact, and others won’t matter at all. But at least think about the implications that they might have when you’re building out your sitemaps.
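The checks above can be sketched as a small filter over crawl results. Everything about the input is an assumption here: I pretend a crawler already gave you, per URL, the status code, whether it is noindexed, whether robots.txt blocks it, and its canonical URL. The function and field names are made up for the example.

```python
def find_sitemap_issues(pages):
    """Return (url, problem) tuples for pages that shouldn't be in a sitemap.

    Each page is a dict with 'url' and 'status', plus optional 'noindex',
    'blocked_by_robots' and 'canonical' keys (a hypothetical crawl format).
    """
    issues = []
    for page in pages:
        url = page["url"]
        if page["status"] != 200:
            issues.append((url, f"status {page['status']}"))
        if page.get("noindex"):
            issues.append((url, "noindex"))
        if page.get("blocked_by_robots"):
            issues.append((url, "blocked by robots.txt"))
        canonical = page.get("canonical")
        if canonical and canonical != url:
            issues.append((url, f"canonical points to {canonical}"))
    return issues


if __name__ == "__main__":
    crawl = [
        {"url": "https://www.example.com/a", "status": 200},
        {"url": "https://www.example.com/b", "status": 301},
        {"url": "https://www.example.com/c?color=red", "status": 200,
         "canonical": "https://www.example.com/c"},
    ]
    for url, problem in find_sitemap_issues(crawl):
        print(url, "->", problem)
```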

Airflow

Lately I’ve been working a ton with Apache Airflow. It’s the framework that we use at Postmates, invented by the great folks at Airbnb and mostly used for dealing with data pipelines. You want to do X, and if X succeeds you want it to go on to task Y. We’re using that for the generation of sitemaps: if we can generate all sitemaps we want to have them pinged to the search engines, if that succeeds we want to run some quality scripts, and if that is done we want to be notified on both email and Slack to tell us at what time the script succeeded.

For some sitemaps we want it to run every day; for a specific segment we want to have it run on an hourly basis. The insights from Airflow give us the details to see if it’s failing or not, and it will notify us when it succeeds/fails. With this setup, we have constant monitoring in place to ensure that sitemaps are being generated daily/hourly.
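To illustrate the idea of "only continue if the previous task succeeded", here is a plain-Python sketch of such a chain. To be clear, this is not Airflow code and not our actual pipeline: in Airflow you would define these steps as tasks in a DAG and let the scheduler handle retries and notifications. The step names and `run_pipeline` are made up.

```python
def run_pipeline(steps):
    """Run (name, callable) steps in order and stop at the first failure.

    Returns (names of completed steps, error message or None).
    """
    completed = []
    for name, step in steps:
        try:
            step()
        except Exception as error:
            # Later steps depend on this one, so we stop here.
            return completed, f"{name} failed: {error}"
        completed.append(name)
    return completed, None


if __name__ == "__main__":
    steps = [
        ("generate_sitemaps", lambda: print("generating sitemaps")),
        ("ping_search_engines", lambda: print("pinging search engines")),
        ("quality_checks", lambda: print("running quality scripts")),
        ("notify", lambda: print("notifying email and Slack")),
    ]
    print(run_pipeline(steps))
```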

Monitoring

Eventually you only want to know if your pages are of good enough quality that they’re being indexed by the search engine. So let’s see how you can see this in Google Search Console.

Index coverage

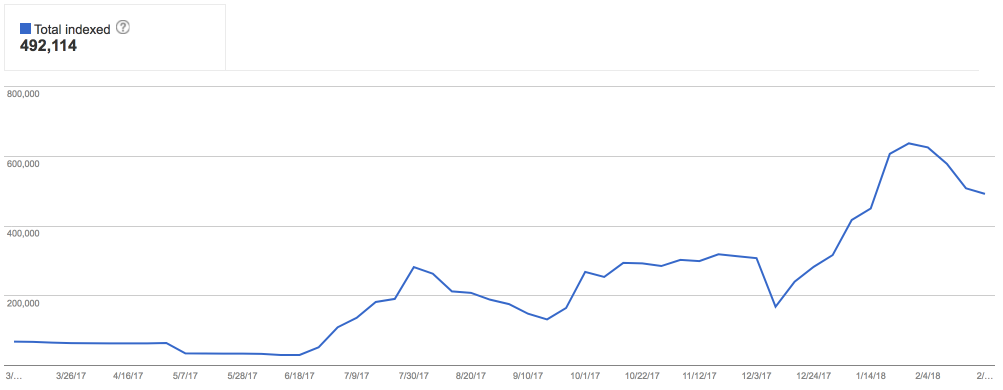

A useful report in Google Search Console is the Index Status report (Google Index > Index Status). For the property that you’ve added, it shows how many pages have been indexed and what pages have been crawled. As the main goal of a sitemap is driving up the number of pages submitted to the Google index, the next step is making sure that they’re actually being indexed. This report will give you that first high-level overview.

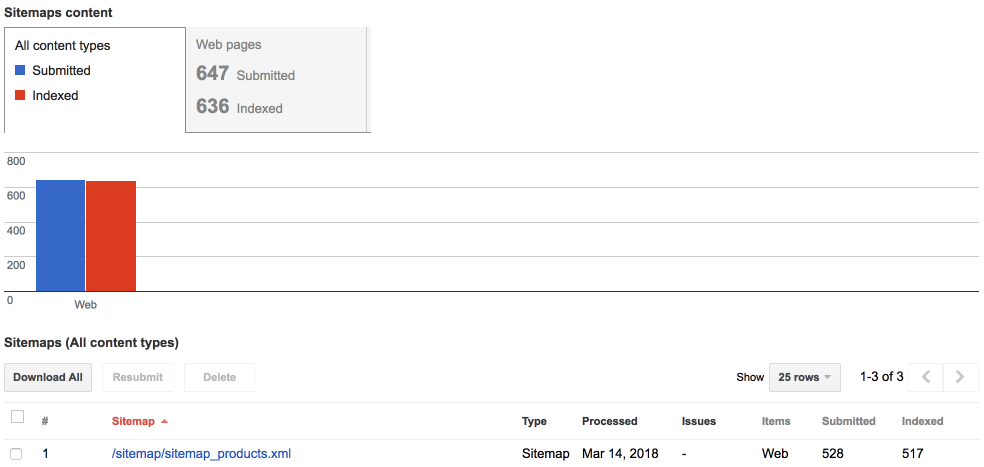

Sitemap Validation: Errors & Amount of URLs

But what about the specifics of the sitemap: are the URLs being crawled properly and are the URLs being submitted to the index? The sitemap reports give you this level of detail (in this case 98% is indexed, which makes sense, as the missing 2% are some test products that Google seems to have ignored, luckily!). Remember what we talked about before regarding segmenting your pages? If you had done that, you would see in this particular example what percentage of the submitted pages in each sitemap was indexed. That’s very useful if you work on big sites where, for example, the internal link structure is lacking and you want to improve that. These reports can (though not always) give you insights into what the balance between them could be.
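If you copy the submitted and indexed counts per sitemap out of Search Console, comparing segments is simple arithmetic. A minimal sketch, where the function name and the numbers in the example are invented purely for illustration:

```python
def index_rates(segments):
    """Map each sitemap segment to the percentage of submitted URLs indexed.

    segments: {name: (submitted, indexed)}. Empty sitemaps get 0.0.
    """
    return {
        name: round(100 * indexed / submitted, 1) if submitted else 0.0
        for name, (submitted, indexed) in segments.items()
    }


if __name__ == "__main__":
    # Made-up counts, not real data.
    print(index_rates({"products": (1000, 980), "static": (20, 20)}))
```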

Quality Assurance

- Are the URLs working (200 status code)? A little-known fact, but Google doesn’t like following redirects or finding broken URLs in your sitemaps. Spend some time on making sure that these pages aren’t in there, or add the right monitoring to prevent it from happening. Since we’ve started gzipping our sitemaps that’s become a tiny bit harder, as you first need to unpack them. But for quality testing we still have scripts in place that can run a crawl of the sitemap on demand to see if all URLs in there are valid.

- Page Quality: Honestly, is this page really worth it to be indexed in Google? Some pages are just not of the quality that they should be and so sometimes you should take that into account when building up sitemaps. Did you filter out the right pages?
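The unpacking step mentioned above is small. Here is a sketch of getting the URLs back out of a gzipped sitemap so a script can crawl them; it assumes a regular `urlset` sitemap (not an index file), and the function name is hypothetical. Requesting each URL and checking for a 200 is left out.

```python
import gzip
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_gzipped_sitemap(gz_bytes):
    """Decompress a gzipped sitemap and return the URLs in its <loc> elements."""
    root = ET.fromstring(gzip.decompress(gz_bytes))
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)]


if __name__ == "__main__":
    xml = (
        b'<?xml version="1.0" encoding="UTF-8"?>'
        b'<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        b"<url><loc>https://www.example.com/</loc></url>"
        b"</urlset>"
    )
    print(urls_from_gzipped_sitemap(gzip.compress(xml)))
```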

Metrics & Analysis

So far we’ve talked about the whole setup and how to monitor results. Let’s go a little step further before we close this subject and look at the information in log files. It’s a topic that I became more familiar with and have worked closely with over the last months too:

Log Files

As log files can be stored on the web server that you’re also using for your regular pages you can get additional insights into how often your sitemaps are being viewed and if there are any issues with sitemaps. As we work on them on a regular basis it could be that they break. That’s why we make sure that for example we monitor the status codes for the URLs so that we can see when a certain sitemap doesn’t hit a successful 200 status code.
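As a sketch of that kind of monitoring: count the status codes per sitemap path in your access logs and alert on anything that isn't a 200. The regular expression assumes the common/combined access log format; your log format may well differ, and the function name is made up.

```python
import re
from collections import Counter

# Matches the request and status part of a common/combined log line, e.g.
# "GET /sitemap/sitemap_index.xml HTTP/1.1" 200
LOG_LINE = re.compile(r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def sitemap_status_counts(log_lines):
    """Count (path, status) pairs for requests whose path contains 'sitemap'."""
    counts = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and "sitemap" in match.group("path"):
            counts[(match.group("path"), int(match.group("status")))] += 1
    return counts


if __name__ == "__main__":
    lines = [
        '66.249.66.1 - - [16/Mar/2018:10:00:01 +0000] '
        '"GET /sitemap/sitemap_index.xml HTTP/1.1" 200 5123 "-" "Googlebot/2.1"',
    ]
    for (path, status), count in sitemap_status_counts(lines).items():
        print(path, status, count)
```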

Proving that pinging works

A while back we started to ping our sitemaps to Google and Bing. Both make it clear (Google) that if you have an existing sitemap and you want to resubmit it, this is a good way to do it. This sounds weird, as Google got rid of their ‘submit a URL’ feature for the index years ago, so we were skeptical about whether this would have any impact. It was really easy to implement: you just fire a GET request to a Google URL with the sitemap URL in there. What we noticed is that Google almost immediately tried to look at these URLs. As we refresh this specific sitemap every hour, we also ping it to Google every hour. Guess what happens: every hour for the last weeks they have looked at the sitemap. Who says you can’t influence crawlers? The result? If you want to ensure that Google is actually looking at a page and actively crawling it, our logs seem to show that pinging makes that happen.

Screenshot of this from a Kibana dashboard where we log server requests
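If you don't have a Kibana dashboard, the same check can be sketched in a few lines: bucket the Googlebot requests for one sitemap per hour and see whether every hour has a hit. The input format (already parsed timestamp, user agent and path) and the function name are assumptions for this sketch.

```python
from collections import Counter
from datetime import datetime


def googlebot_hits_per_hour(requests, sitemap_path):
    """Count Googlebot requests for sitemap_path, bucketed by hour.

    requests: iterable of (timestamp, user_agent, path) tuples, a
    hypothetical pre-parsed form of your access logs.
    """
    hits = Counter()
    for timestamp, user_agent, path in requests:
        if path == sitemap_path and "Googlebot" in user_agent:
            # Truncate the timestamp to the start of its hour.
            hits[timestamp.replace(minute=0, second=0, microsecond=0)] += 1
    return hits


if __name__ == "__main__":
    sample = [
        (datetime(2018, 3, 16, 10, 0, 12), "Googlebot/2.1", "/sitemap/hourly.xml"),
        (datetime(2018, 3, 16, 11, 0, 9), "Googlebot/2.1", "/sitemap/hourly.xml"),
    ]
    for hour, count in sorted(googlebot_hits_per_hour(sample, "/sitemap/hourly.xml").items()):
        print(hour, count)
```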

What if you can’t ping? Usually I would only recommend pinging a search engine if your whole sitemap generation process is fully automated; it doesn’t make sense to open your browser or have a tiny script just for this. If you still want basically the same effect, use the Resubmit button in Google Search Console > Sitemaps.

Future

This is not all of it and I’ve gone over some topics briefly. I didn’t want to document everything, as there’s already a ton of information from Google and other sites about how exactly you can set up sitemaps. In my case, we’re on a route to figure out how we can make our sitemap setup near perfect. What I still want to investigate or analyze:

- Adding a Last Modified Header to pages in the sitemap, what is the effect of pinging a sitemap and Google looking at all pages or just the ones that are modified?

- Segmenting them even further, let’s say I only add 100/1000 pages to a sitemap and start creating just more of them, does that influence crawling, do we get better insights?

Resources

Want to learn more about sitemaps? Look into the following resources for the concept, the idea behind it and the technical specification:

- https://www.sitemaps.org – Sitemaps.org

- Learn about sitemaps – Google

- Build and submit a sitemap – Google

- How to submit sitemaps – Bing

- Submit your sitemaps to search engines – Yoast

- PubSubHubbub – PubSubhubbub

Next steps?

When I started writing I didn’t plan to have this become everything I know about sitemaps. But what did I miss? What optimizations can we apply to sitemaps in order to get better insights and speed up the crawling of pages? This is just one of the areas of technical SEO, but probably an important one if you’re looking for deeper insights into what Google or Bing think about your site. If you have questions or comments, feel free to give a shout on Twitter: @MartijnSch